Abstract

To encode allocentric space information of a viewing object, it is important to relate perceptual information in the first-person perspective to the representation of an entire scene which would be constructed before. A substantial number of studies investigated the constructed scene information (e.g., cognitive map). However, only few studies have focused on its influence on perceptual processing. Therefore, we designed a visually guided saccade task requiring monkeys to gaze at objects in different locations on different backgrounds clipped from large self-designed mosaic pictures (parental pictures). In each trial, we presented moving backgrounds prior to object presentations, indicating a frame position of the background image on a parental picture. We recorded single-unit activities from 377 neurons in the posterior inferotemporal (PIT) cortex of two macaques. Equivalent numbers of neurons showed space-related (119 of 377) and object-related (125 of 377) information. The space-related neurons coded the gaze locations and background images jointly rather than separately. These results suggest that PIT neurons represent a particular location within a particular background image. Interestingly, frame positions of background images on parental pictures modulated the space-related responses dependently on parental pictures. As the frame positions could be acquired by only preceding visual experiences, the present results may provide neuronal evidence of a mnemonic effect on current perception, which might represent allocentric object location in a scene beyond the current view.

Original Link: https://doi.org/10.1016/j.pneurobio.2024.102670

As we navigate our environment, our eyes move continuously, capturing the complexity of the world and projecting it onto our retinas. To perceive the environment in its entirety, we integrate the captured images into a coherent visual space, discerning the location and characteristics of objects around us. The present study (Li, Chen and Naya, 2024) shows that preceding visual experience affects current perceptual processing in the posterior inferotemporal cortex of nonhuman primates so as to represent the allocentric location of an object in a scene.

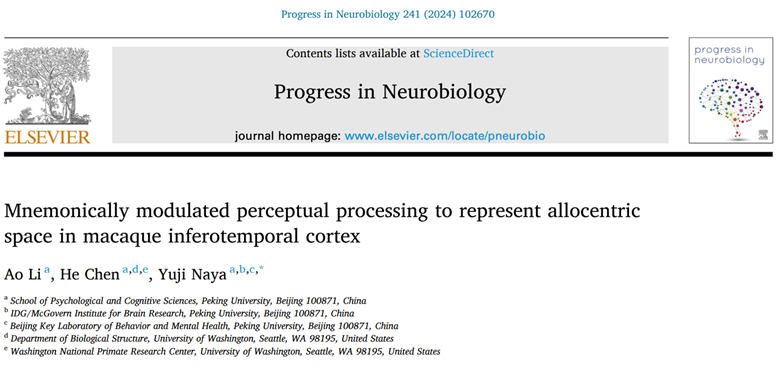

Specifically, a two-factor experimental design that simultaneously manipulated the gaze location (n=4) and the background stimulus (n=4) allowed the researchers to examine the relationship between neuronal activity and the retinal projection of images. The nested scene design, in which each background is a frame clipped from a larger parent picture, together with the moving backgrounds shown before object presentation, made it possible to investigate how the mental representation of an integrated scene influences current perception (Figure 1).

Figure 1. Task design and recording sites

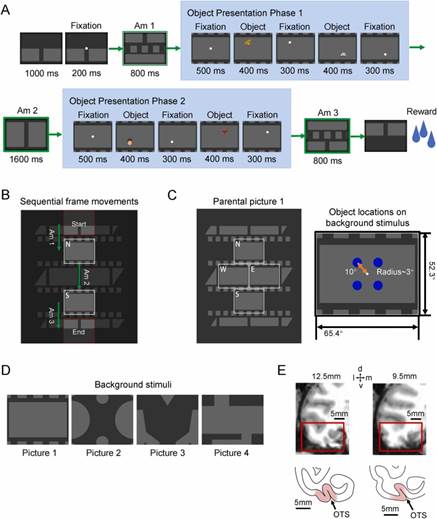

The results showed that posterior inferotemporal (PIT) neurons encoding spatial information tended to be jointly modulated by gaze location and background stimulus (51 of 119 cells, 42.9%) (Figure 2). This suggests that the modulation reflects a specific combination of gaze location and background stimulus (i.e., a view-centered background signal) (Chen and Naya, 2020a, 2020b), rather than the independent convergence of the two factors on individual neurons.
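The distinction between joint and independent modulation can be illustrated with a small simulation. The sketch below is not the authors' analysis: it assumes a balanced two-way ANOVA on a 4 × 4 design (gaze location × background) and uses two hypothetical neurons with made-up firing rates. An "additive" neuron sums separate gaze and background effects, while a "conjunctive" neuron responds only to one particular gaze location on one particular background. Only the conjunctive neuron should yield a large interaction F ratio.

```python
import random
from statistics import mean


def two_way_anova_f(rates):
    """F ratios for a balanced two-way design.

    rates[g][b] is a list of single-trial firing rates for gaze
    location g and background b (same trial count in every cell).
    """
    n_gaze, n_bg, n_rep = len(rates), len(rates[0]), len(rates[0][0])
    cells = [(g, b) for g in range(n_gaze) for b in range(n_bg)]
    cell_mean = {(g, b): mean(rates[g][b]) for g, b in cells}
    grand = mean(list(cell_mean.values()))
    gaze_mean = [mean([cell_mean[g, b] for b in range(n_bg)]) for g in range(n_gaze)]
    bg_mean = [mean([cell_mean[g, b] for g in range(n_gaze)]) for b in range(n_bg)]

    # Sums of squares for the two main effects, the interaction, and the residual.
    ss_gaze = n_bg * n_rep * sum((m - grand) ** 2 for m in gaze_mean)
    ss_bg = n_gaze * n_rep * sum((m - grand) ** 2 for m in bg_mean)
    ss_inter = n_rep * sum(
        (cell_mean[g, b] - gaze_mean[g] - bg_mean[b] + grand) ** 2 for g, b in cells
    )
    ss_err = sum((x - cell_mean[g, b]) ** 2 for g, b in cells for x in rates[g][b])

    df_gaze, df_bg = n_gaze - 1, n_bg - 1
    ms_err = ss_err / (n_gaze * n_bg * (n_rep - 1))
    return {
        "gaze": ss_gaze / df_gaze / ms_err,
        "background": ss_bg / df_bg / ms_err,
        "interaction": ss_inter / (df_gaze * df_bg) / ms_err,
    }


def simulate(rate_fn, rng, n_rep=10, noise_sd=2.0):
    """Hypothetical trials: mean rate from rate_fn(g, b) plus Gaussian noise."""
    return [
        [[rate_fn(g, b) + rng.gauss(0, noise_sd) for _ in range(n_rep)] for b in range(4)]
        for g in range(4)
    ]


rng = random.Random(0)  # fixed seed so the illustration is reproducible

# Independent convergence: gaze and background effects simply add up.
additive_f = two_way_anova_f(simulate(lambda g, b: 10 + 4 * g + 3 * b, rng))

# Joint coding: a response to one gaze location on one background only.
conjunctive_f = two_way_anova_f(
    simulate(lambda g, b: 10 + (20 if (g, b) == (1, 2) else 0), rng)
)

print("additive neuron:   ", additive_f)
print("conjunctive neuron:", conjunctive_f)
```

In this toy setting, the additive neuron shows strong main effects but an interaction F ratio near 1, whereas the conjunctive neuron shows a large interaction term. The effect sizes, noise level, and trial counts are arbitrary choices for illustration and do not correspond to the recorded data.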

Figure 2. Example of a PIT cell encoding spatial information and representing a combination of gaze location and background stimulus.

TL, top left; TR, top right; BL, bottom left; BR, bottom right.

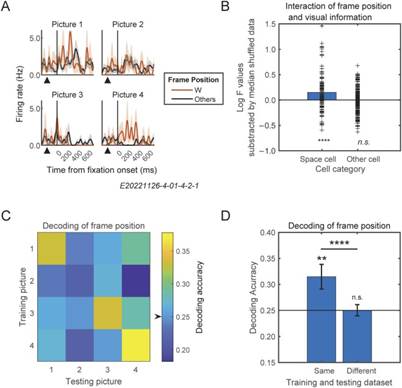

Interestingly, using a gaze-only paradigm that does not require active encoding, they further found that PIT space-related neurons tend to be modulated by the frame position within the scene (Figure 3). Moreover, this modulation tends to be specific to frame positions within the parent scene to which the current background image belongs, rather than reflecting a general frame position across scenes (e.g., upward or leftward relative to the center in all scene pictures). This suggests that the view-centered background signal, which reflects current perceptual information, may be modulated by mnemonic signals derived from the preceding visual experience of looking around a scene, thereby encoding the current gaze location relative to the global scene (i.e., allocentric spatial encoding).

Figure 3. Mnemonic effect on the representation of current visual perception at the single-neuron and population levels. Panel A shows a space-related cell’s responses at the best frame position (W, orange) and at the other frame positions (average, black) within each background stimulus.

Through a series of studies using carefully designed visual perception and memory paradigms, the Naya lab has systematically revealed subtle “where” information in the conventional “what” pathway. Because it is encoded effortlessly, the view-centered background signal may be an ideal channel for linking cognitive processes such as perception and memory, and may serve downstream neurons such as spatial view cells (Rolls et al., 1997) in the hippocampus of nonhuman primates.

References:

Chen, H., & Naya, Y. (2020a). Automatic Encoding of a View-Centered Background Image in the Macaque Temporal Lobe. Cerebral Cortex, 30(12), 6270–6283. https://doi.org/10.1093/cercor/bhaa183

Chen, H., & Naya, Y. (2020b). Forward Processing of Object–Location Association from the Ventral Stream to Medial Temporal Lobe in Nonhuman Primates. Cerebral Cortex, 30(3), 1260–1271. https://doi.org/10.1093/cercor/bhz164

Li, A., Chen, H., & Naya, Y. (2024). Mnemonically modulated perceptual processing to represent allocentric space in macaque inferotemporal cortex. Progress in Neurobiology, 241, 102670. https://doi.org/10.1016/j.pneurobio.2024.102670

Rolls, E. T., Robertson, R. G., & Georges‐François, P. (1997). Spatial View Cells in the Primate Hippocampus. European Journal of Neuroscience, 9(8), 1789–1794. https://doi.org/10.1111/j.1460-9568.1997.tb01538.x